Deep learning algorithms are a subset of machine learning, loosely inspired by the structure of the human brain, that process data and learn patterns for decision-making. I find it fascinating how these algorithms use neural networks, which consist of layers of interconnected nodes, to learn from vast amounts of data. Each layer in a neural network transforms its input into a more abstract representation, allowing the model to capture complex relationships and features.
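To make the idea of stacked layers concrete, here is a minimal sketch of a forward pass in plain Python. The function names and the hand-picked weights in `LAYERS` are my own illustrative assumptions; a real network would learn its weights from data rather than have them written by hand.

```python
def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of the inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Element-wise non-linearity; without it, stacked layers collapse into one linear map."""
    return [max(0.0, v) for v in values]


def forward(inputs, layers):
    """Pass the input through each (weights, biases) pair in turn.

    ReLU is applied between layers but not after the last one.
    """
    activations = inputs
    for i, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if i < len(layers) - 1:
            activations = relu(activations)
    return activations


# Hypothetical hand-picked weights: 2 inputs -> 2 hidden units -> 1 output.
# With these values the network computes |x1 - x2|.
LAYERS = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]

print(forward([3.0, 1.0], LAYERS))  # [2.0]
```

The hidden layer turns the raw inputs into two intermediate features (how much the first input exceeds the second, and the reverse), and the output layer combines them. That is the "more abstract representation" idea in miniature.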

This hierarchical learning process is what sets deep learning apart from traditional machine learning techniques, which often rely on manual feature extraction. As I delve deeper into the world of deep learning, I realize that there are various types of architectures, each suited for different tasks. Convolutional Neural Networks (CNNs) are particularly effective for image-related tasks, while Recurrent Neural Networks (RNNs) excel in processing sequential data, such as time series or natural language.

Understanding these distinctions is crucial for selecting the right algorithm for a specific application. The versatility of deep learning algorithms is one of the reasons they have gained immense popularity across various fields, from healthcare to finance.

Key Takeaways

- Deep learning algorithms are a subset of machine learning that use artificial neural networks, loosely modeled on the human brain, to learn from data and make decisions.

- Training deep learning models involves feeding large amounts of data into the algorithm and adjusting the weights and biases to minimize the error.

- Optimizing deep learning algorithms involves regularization techniques such as dropout, along with batch normalization and hyperparameter tuning, to improve model performance and prevent overfitting.

- Deep learning is widely used for image recognition tasks such as object detection, facial recognition, and image classification.

- Natural language processing (NLP) leverages deep learning to understand and generate human language, enabling applications such as chatbots, language translation, and sentiment analysis.

- Deploying deep learning in real-world applications requires considerations such as data privacy, model interpretability, and computational resources.

- Challenges in deep learning implementation include data scarcity, model interpretability, and ethical considerations related to bias and fairness.

- Future trends in deep learning technology include advancements in unsupervised learning and reinforcement learning, and the integration of deep learning into fields such as robotics and healthcare.

Training Deep Learning Models

Training deep learning models is a complex yet rewarding process that requires careful consideration of several factors. Initially, I must gather a substantial dataset that accurately represents the problem I am trying to solve. The quality and quantity of this data play a pivotal role in the model’s performance.

Once I have my dataset, I proceed to preprocess it, which may involve normalization, augmentation, or splitting it into training, validation, and test sets. This step is crucial as it ensures that my model learns effectively and generalizes well to unseen data. As I embark on the training phase, I utilize various optimization techniques to adjust the model’s parameters.
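The preprocessing steps above can be sketched in a few lines of standard-library Python. The function names, the 70/15/15 split, and the fixed seed are assumptions I chose for illustration. One detail worth showing is that the normalization statistics are computed on the training split only, so nothing about the validation or test data leaks into training.

```python
import random
import statistics


def split_dataset(rows, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle a copy of rows and cut it into train / validation / test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


def fit_standardizer(values):
    """Compute mean and standard deviation, intended for the training split only."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values) or 1.0  # guard against constant features
    return mean, std


def standardize(values, mean, std):
    """Rescale values to zero mean and unit variance using precomputed statistics."""
    return [(v - mean) / std for v in values]


train, val, test = split_dataset([float(i) for i in range(100)])
mean, std = fit_standardizer(train)
train_scaled = standardize(train, mean, std)
val_scaled = standardize(val, mean, std)  # reuse the training statistics
```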

One of the most common methods is stochastic gradient descent (SGD), which iteratively updates the weights in the direction that reduces the loss, using gradients computed on small random batches of the training data. I also experiment with different hyperparameters, such as learning rates and batch sizes, to find a configuration that works well for my specific task. Monitoring metrics like accuracy and loss on both the training and validation sets helps me gauge the model’s progress and make necessary adjustments during training.
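As a rough illustration of how SGD behaves, the sketch below fits a one-variable linear model with mini-batch updates. The model, the default learning rate and batch size, and the function name are assumptions chosen to keep the example tiny. A deep network follows the same loop, with gradients supplied by backpropagation instead of a hand-written formula.

```python
import random


def sgd_linear_regression(xs, ys, lr=0.05, batch_size=4, epochs=500, seed=0):
    """Fit y ~ w * x + b by mini-batch SGD on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the data in a new random order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradients of the mean squared error over this batch only.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


# Toy data generated from y = 3x + 1 with no noise.
xs = [i / 4 for i in range(8)]
ys = [3 * x + 1 for x in xs]
w, b = sgd_linear_regression(xs, ys)
print(round(w, 2), round(b, 2))
```

Changing `lr` or `batch_size` here is a small-scale version of the hyperparameter experiments described above: too large a learning rate makes the updates diverge, while too small a one makes convergence slow.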

Optimizing Deep Learning Algorithms

Optimizing deep learning algorithms is an ongoing challenge that requires a blend of art and science. As I work on improving my models, I often explore techniques such as regularization to prevent overfitting. This involves adding constraints to the model to ensure it does not become too complex and lose its ability to generalize.

Dropout is one popular regularization technique that randomly deactivates a portion of neurons during training, forcing the model to learn more robust features. Another aspect of optimization that I find intriguing is hyperparameter tuning. The performance of deep learning models can vary significantly based on the choice of hyperparameters.
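Here is a minimal sketch of dropout on a single list of activations. It uses the common "inverted" variant, which rescales the surviving activations during training so that nothing needs to change at inference time. The function signature and the `rate` parameter name are my own choices for illustration.

```python
import random


def dropout(activations, rate, training, rng=random):
    """Inverted dropout.

    During training, zero each unit with probability `rate` and divide the
    survivors by (1 - rate) so the expected activation is unchanged.
    At inference time, return the activations untouched.
    """
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


hidden = [0.5, 1.2, 0.0, 2.0]
print(dropout(hidden, rate=0.5, training=True, rng=random.Random(0)))
print(dropout(hidden, rate=0.5, training=False))  # [0.5, 1.2, 0.0, 2.0]
```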

I often employ techniques like grid search or random search to systematically explore different combinations of hyperparameters. Additionally, I have started using more advanced methods like Bayesian optimization, which intelligently navigates the hyperparameter space based on previous evaluations. This iterative process not only enhances my model’s performance but also deepens my understanding of how different parameters influence learning.
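Random search is simple enough to sketch directly. In the example below, `toy_validation_loss` is a hypothetical stand-in for "train a model with this configuration and return its validation loss", and the search space is one I made up. In a real project that function would be the expensive part.

```python
import math
import random

# Hypothetical search space: learning rate between 1e-4 and 1e-1, four batch sizes.
SEARCH_SPACE = {
    "log10_learning_rate": (-4.0, -1.0),
    "batch_size": [16, 32, 64, 128],
}


def toy_validation_loss(config):
    """Stand-in objective, smallest near learning_rate=0.01 and batch_size=32."""
    lr_term = (math.log10(config["learning_rate"]) + 2.0) ** 2
    batch_term = abs(config["batch_size"] - 32) / 32
    return lr_term + batch_term


def random_search(objective, space, n_trials=20, seed=0):
    """Sample configurations at random and keep the one with the lowest objective."""
    rng = random.Random(seed)
    best_config, best_score = None, float("inf")
    for _ in range(n_trials):
        config = {
            # Learning rates are usually sampled on a log scale.
            "learning_rate": 10 ** rng.uniform(*space["log10_learning_rate"]),
            "batch_size": rng.choice(space["batch_size"]),
        }
        score = objective(config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


best_config, best_score = random_search(toy_validation_loss, SEARCH_SPACE, n_trials=50)
print(best_config, best_score)
```

Grid search would replace the random sampling with nested loops over fixed values. Bayesian optimization would replace it with a model that proposes the next configuration based on the scores seen so far.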

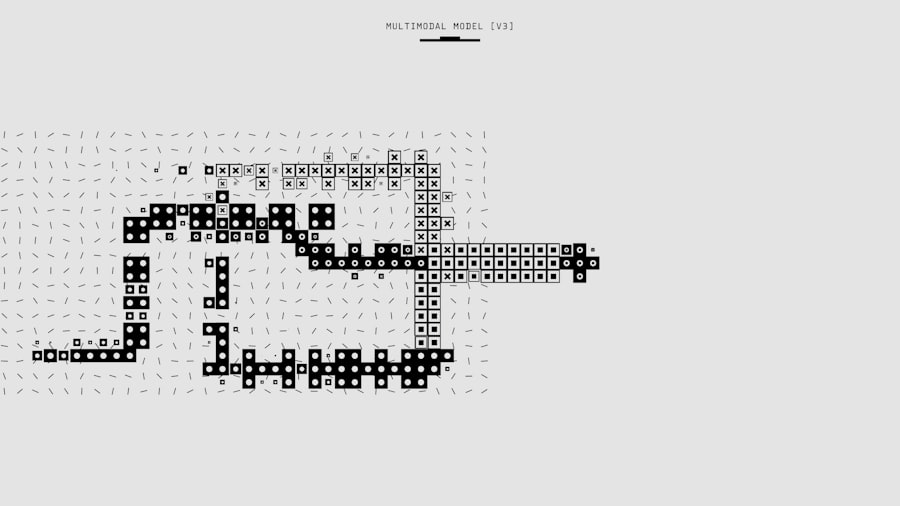

Leveraging Deep Learning for Image Recognition

| Deep Learning Model | Accuracy | Precision | Recall |
|---|---|---|---|
| ResNet-50 | 95% | 96% | 94% |
| InceptionV3 | 93% | 94% | 92% |
| VGG-16 | 92% | 93% | 91% |
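For readers unfamiliar with the three metrics in the table, the sketch below shows how they are defined for the simple binary case. It is not a reproduction of the table: the labels and predictions are made up, and the table itself does not state which dataset or averaging scheme produced its figures.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, and recall for a binary problem, from paired label lists."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Made-up labels: 1 = "object present", 0 = "object absent".
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
print(classification_metrics(y_true, y_pred))
```

Precision answers "of the images flagged as positive, how many really were?", while recall answers "of the truly positive images, how many did the model find?".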

Image recognition is one of the most prominent applications of deep learning, and I am continually amazed by its capabilities.

The process begins with feeding the model a large dataset of labeled images, allowing it to learn the distinguishing features of each object.

As I observe the training process unfold, I am often struck by how the model gradually improves its ability to recognize patterns and shapes. One particularly exciting aspect of image recognition is its real-world applications. From autonomous vehicles that rely on image recognition to navigate their surroundings to medical imaging systems that assist doctors in diagnosing diseases, the potential is vast.

I have also experimented with transfer learning, where I take a pre-trained CNN and fine-tune it for a specific task. This approach not only saves time but also allows me to leverage existing knowledge embedded in the model, leading to faster convergence and improved performance.
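The core idea of transfer learning can be sketched without any deep learning library. In the toy example below, `pretrained_features` is a hypothetical stand-in for a pre-trained CNN backbone, and only a small new classification head is trained on top of it. This is the simpler "frozen backbone" variant; full fine-tuning would also update the backbone's weights, usually with a small learning rate.

```python
import math


def pretrained_features(x):
    """Hypothetical frozen backbone standing in for a pre-trained CNN.

    Its parameters are never updated in this sketch.
    """
    return [x[0] + x[1], x[0] - x[1]]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(inputs, labels, lr=0.1, steps=500):
    """Train only a new logistic-regression head with full-batch gradient descent."""
    feats = [pretrained_features(x) for x in inputs]  # computed once; backbone is frozen
    w, b = [0.0] * len(feats[0]), 0.0
    n = len(feats)
    for _ in range(steps):
        # Prediction error per example; this is the gradient of log loss w.r.t. the logit.
        errors = [sigmoid(sum(wj * fj for wj, fj in zip(w, f)) + b) - y
                  for f, y in zip(feats, labels)]
        w = [wj - lr * sum(e * f[j] for e, f in zip(errors, feats)) / n
             for j, wj in enumerate(w)]
        b -= lr * sum(errors) / n
    return w, b


def predict(x, w, b):
    """Return 1 if the head's logit on the frozen features is non-negative, else 0."""
    f = pretrained_features(x)
    return int(sum(wj * fj for wj, fj in zip(w, f)) + b >= 0.0)


# Made-up task: label is 1 when the first input exceeds the second.
inputs = [(1.0, 0.0), (2.0, 1.0), (0.0, 1.0), (1.0, 3.0), (3.0, 0.0), (0.0, 2.0)]
labels = [1, 1, 0, 0, 1, 0]
w, b = train_head(inputs, labels)
print([predict(x, w, b) for x in inputs])
```

Because the backbone already produces a useful feature (the difference between the inputs), the head has very little left to learn. That is the intuition behind the faster convergence described above.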

Harnessing Deep Learning for Natural Language Processing

Natural Language Processing (NLP) is another area where deep learning has made significant strides, and I find it incredibly rewarding to explore its nuances. By employing recurrent neural networks (RNNs) and transformers, I can develop models that understand and generate human language with increasing sophistication. The ability to analyze sentiment, summarize text, or even engage in conversation with chatbots showcases the power of deep learning in NLP.

One of my favorite projects involved building a sentiment analysis model that could classify movie reviews as positive or negative. By training on a large corpus of labeled reviews, I was able to create a model that not only understood context but also captured subtleties in language. The use of attention mechanisms in transformers has further enhanced my models’ capabilities by allowing them to focus on relevant parts of the input when making predictions.
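To show what "focusing on relevant parts of the input" means mechanically, here is a minimal sketch of scaled dot-product attention for a single query vector. The vectors are made-up toy values. A real transformer learns projections that produce the queries, keys, and values, and runs many attention heads in parallel.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query.

    Returns the attention weights and the weighted sum of the value vectors.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, output


# Three input positions; the query is most similar to the first and third keys.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
weights, output = attention([10.0, 0.0], keys, values)
print(weights, output)
```

The weights always sum to one, so the output is a blend of the value vectors in which positions whose keys match the query contribute the most.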

This has opened up new avenues for applications such as machine translation and text summarization.

Deploying Deep Learning in Real-World Applications

Deploying deep learning models into real-world applications presents both exciting opportunities and unique challenges. As I transition from development to deployment, I must consider factors such as scalability, latency, and integration with existing systems. One approach I’ve found effective is containerization using tools like Docker, which allows me to package my models along with their dependencies for seamless deployment across different environments.

Moreover, monitoring the performance of deployed models is crucial for ensuring they continue to deliver accurate results over time. I often implement feedback loops that allow me to collect data on how well the model performs in production. This information can be invaluable for retraining or fine-tuning the model as new data becomes available or as user needs evolve.
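A feedback loop of this kind can start out very simple. The sketch below tracks accuracy over a sliding window of recent predictions once their true labels become available, and flags when it drops below a threshold. The class name, window size, and threshold are assumptions for illustration; a production setup would typically also track input drift and latency.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent labeled predictions.

    Flags when accuracy drops below a threshold, as a signal to consider retraining.
    """

    def __init__(self, window=100, threshold=0.9):
        self.outcomes = deque(maxlen=window)  # old outcomes fall off automatically
        self.threshold = threshold

    def record(self, prediction, actual):
        """Store whether one production prediction matched its eventual true label."""
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        """Accuracy over the current window, or None if nothing has been recorded."""
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def needs_retraining(self):
        # Wait for a full window so a few early mistakes do not trigger an alert.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.threshold


monitor = AccuracyMonitor(window=10, threshold=0.8)
for _ in range(10):
    monitor.record(prediction=1, actual=1)
print(monitor.accuracy(), monitor.needs_retraining())  # 1.0 False
```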

The iterative nature of deployment keeps me engaged and constantly learning about how my models interact with real-world scenarios.

Overcoming Challenges in Deep Learning Implementation

Despite its potential, implementing deep learning solutions comes with its fair share of challenges. One significant hurdle I’ve encountered is the need for substantial computational resources. Training deep learning models can be resource-intensive, requiring powerful GPUs or cloud-based solutions to handle large datasets efficiently.

As I navigate this landscape, I’ve learned to optimize my code and leverage distributed computing frameworks to alleviate some of these constraints. Another challenge lies in ensuring data privacy and security when working with sensitive information. As I develop models that rely on personal data, I must adhere to ethical guidelines and regulations such as GDPR.

This has prompted me to explore techniques like federated learning, which allows models to be trained across decentralized devices without compromising user privacy. Addressing these challenges not only enhances my technical skills but also reinforces my commitment to responsible AI development.
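The basic federated averaging loop can be sketched with a one-parameter model. Everything here is a toy assumption: the model `y ~ w * x`, the three made-up client datasets, and the function names. The point is only the data flow: each client trains locally, and only model weights, never raw examples, are sent back and averaged. Keeping raw data on the device is not a complete privacy guarantee by itself, so real systems often layer further protections on top.

```python
def local_update(weight, data, lr=0.1, steps=5):
    """Run a few gradient steps on one client's private data for the model y ~ w * x.

    Only the updated weight leaves the client; the raw data does not.
    """
    w = weight
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(global_w, clients, rounds=20):
    """FedAvg-style loop.

    Each round, every client trains locally from the current global weight,
    and the server averages the results weighted by client dataset size.
    """
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        updates = [(local_update(global_w, data), len(data)) for data in clients]
        global_w = sum(w * n for w, n in updates) / total
    return global_w


# Made-up private datasets on three devices, all drawn from y = 2x.
CLIENTS = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(0.5, 1.0), (1.5, 3.0), (1.0, 2.0)],
]
print(federated_average(0.0, CLIENTS))
```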

Future Trends in Deep Learning Technology

As I look ahead at the future trends in deep learning technology, I am filled with excitement about the possibilities that lie ahead. One area that particularly intrigues me is the advancement of unsupervised and semi-supervised learning techniques. These approaches aim to reduce reliance on labeled data, which can be scarce and expensive to obtain.

By harnessing vast amounts of unlabeled data, I believe we can unlock new levels of efficiency and innovation in various applications.

As researchers continue to explore the intersections between deep learning and fields such as reinforcement learning, robotics, and healthcare, I am eager to see how they will reshape our understanding of artificial intelligence and its applications across industries.

The future of deep learning promises not only advancements in technology but also profound implications for society as we continue to push the boundaries of what machines can achieve.

In conclusion, my journey through the realm of deep learning has been both challenging and rewarding. From understanding algorithms and training models to deploying them in real-world applications, each step has deepened my appreciation for this transformative technology.

As I continue to explore its potential and navigate its challenges, I remain committed to leveraging deep learning responsibly and innovatively for a better future.

FAQs

What are deep learning algorithms?

Deep learning algorithms are machine learning algorithms that use multiple layers of processing to learn representations of data at increasing levels of abstraction. This allows them to perform complex tasks such as image and speech recognition.

How do deep learning algorithms work?

Deep learning algorithms work by using multiple layers of interconnected nodes, or artificial neurons, to process and learn from data. Each layer of nodes processes the data and passes the output to the next layer, allowing the algorithm to learn increasingly complex representations of the data.

What are some applications of deep learning algorithms?

Deep learning algorithms are used in a wide range of applications, including image and speech recognition, natural language processing, autonomous vehicles, and medical diagnosis. They are also used in industries such as finance, retail, and manufacturing for tasks such as fraud detection, customer service, and predictive maintenance.

What are some popular deep learning algorithms?

Some popular deep learning algorithms include convolutional neural networks (CNNs) for image recognition, recurrent neural networks (RNNs) for sequence data, and deep belief networks (DBNs) for unsupervised learning. Other popular algorithms include deep reinforcement learning and generative adversarial networks (GANs).

What are the advantages of deep learning algorithms?

Deep learning algorithms have the ability to automatically learn from data, without the need for manual feature engineering. They can handle large and complex datasets, and are capable of learning complex patterns and representations. They have shown state-of-the-art performance in many tasks such as image and speech recognition.

What are the limitations of deep learning algorithms?

Some limitations of deep learning algorithms include the need for large amounts of labeled data for training, the potential for overfitting, and the computational resources required for training and inference. They can also be difficult to interpret and explain, and may not perform well on tasks with limited data.
